The Memory Construct

The most visible parts of a computer's memory system are the memory module and the slot it is inserted into. These are sometimes loosely referred to as banks. Each generation and type of memory has a slightly different layout to prevent the accidental installation of incompatible modules, which could cause damage.

On each memory module there are small memory chips called DRAM, sometimes referred to by the more generic name Integrated Circuits (ICs). The DRAM core is an array of capacitors called cells, each of which retains data (either 0 or 1) by holding an electrical charge.

Before any data can be read, the specific capacitor has to be discharged into an amplifier. As a result, every read is also a destructive action that wipes the capacitor of any viable charge, so additional steps are required to recharge the cells that have been read. This volatile nature is the key difference between RAM technology and Solid State memories such as NAND and NOR.
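The destructive read described above can be sketched with a toy model. This is only an illustration, not how a real DRAM controller is implemented: the `DramCell` class and the `read_with_restore` helper are hypothetical names, and a single boolean stands in for the capacitor's charge.

```python
class DramCell:
    """Toy model of one DRAM cell: charged means 1, discharged means 0."""

    def __init__(self, bit: int = 0) -> None:
        self.charged = bool(bit)

    def sense(self) -> int:
        """Discharge the capacitor into the sense amplifier (destructive)."""
        bit = 1 if self.charged else 0
        self.charged = False  # the charge is gone once it has been sensed
        return bit

    def write(self, bit: int) -> None:
        """Charge or discharge the capacitor to store a bit."""
        self.charged = bool(bit)


def read_with_restore(cell: DramCell) -> int:
    """Read a cell, then write the sensed value back so the data survives."""
    bit = cell.sense()
    cell.write(bit)
    return bit


cell = DramCell(1)
first = cell.sense()    # returns the stored bit
second = cell.sense()   # the first read wiped the charge, so this sees 0

cell.write(1)
restored_first = read_with_restore(cell)
restored_second = read_with_restore(cell)  # still 1: the value was written back
```

In the sketch, a bare `sense()` loses the bit after one read, while `read_with_restore()` can be repeated safely because every read is followed by a recharge.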

According to Kingston Memory, with first-generation Double Data Rate (DDR1) memory the average computer takes about 200ns (nanoseconds) to access physical memory, compared to 12,000,000ns for the hard disk drive. Put in more comprehensible terms, the hard disk is 60,000 times slower: the difference between doing something in 1 minute and doing it in about 42 days.
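The arithmetic behind that comparison is easy to check. The short sketch below uses only the two access times quoted above; the variable names are our own.

```python
MEMORY_ACCESS_NS = 200        # DDR1 physical memory access time quoted above
DISK_ACCESS_NS = 12_000_000   # hard disk access time quoted above

# How many times slower the disk is than memory.
slowdown = DISK_ACCESS_NS / MEMORY_ACCESS_NS

# Scale a 1-minute job by the same factor and express it in days.
MINUTES_PER_DAY = 60 * 24
days = (1 * slowdown) / MINUTES_PER_DAY

print(f"{slowdown:,.0f}x slower; 1 minute becomes {days:.1f} days")
# prints: 60,000x slower; 1 minute becomes 41.7 days
```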

Difference between Cache Memory and RAM

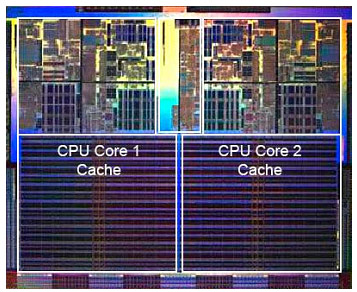

Cache is a type of memory that is usually much faster than standard system memory such as DDR. It acts as a specialised storage area for frequently requested data to allow for very fast access. Caches are sometimes casually called “Buffers”, depending on the situation.

Within the processor die, caches are segmented by priority and access speed: Level 1 (L1), Level 2 (L2) and Level 3 (L3). L1 is the fastest and has the lowest latency, but also the least capacity (Kilobytes). The most frequently accessed instructions and data in the DDR memory modules are copied into the L1 cache while they are being worked on. Caches have many different responsibilities: some are used purely for data, while others are specialised for command codes or program instructions. All modern desktop CPUs have dedicated, separate L1 caches for instructions and for data.
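To illustrate the idea of keeping frequently requested data close at hand, here is a minimal software sketch of a small cache sitting in front of a larger, slower store. It rests on several assumptions: the `TinyCache` class is hypothetical, a Python dictionary stands in for main memory, and least-recently-used eviction is chosen purely for illustration. Real CPU caches are implemented in hardware and their replacement policies vary.

```python
from collections import OrderedDict


class TinyCache:
    """A small, fast store in front of a larger, slower one (LRU eviction assumed)."""

    def __init__(self, backing: dict, capacity: int) -> None:
        self.backing = backing        # stands in for main memory
        self.capacity = capacity      # number of entries the cache can hold
        self.lines = OrderedDict()    # address -> value, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, address: int):
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)   # mark as most recently used
            return self.lines[address]
        self.misses += 1
        value = self.backing[address]         # slow path: fetch from main memory
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used entry
        return value


main_memory = {addr: addr * 2 for addr in range(16)}
cache = TinyCache(main_memory, capacity=2)

for addr in [3, 3, 3, 7, 3, 9, 7]:
    cache.read(addr)

print(cache.hits, cache.misses)  # prints: 3 4
```

Address 3 is requested repeatedly, so after the first miss it is served from the cache. The later misses come from the cache being too small to hold all three addresses at once, which is the capacity trade-off noted above for L1.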

L1 cache can be many times faster than standard DDR2 or even DDR3 memory. An Intel E6750 paired with a recent Intel X38 chipset has an L1 cache performance of about 42,500MB/s. This is followed by a slightly slower but much larger (Megabytes) L2 cache that performs at around 20,500MB/s. By comparison, the latest dual channel DDR3 memory at 1,333MHz with average latency settings has a bandwidth of around 8,800MB/s.
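To put those figures side by side, the snippet below expresses each one as a multiple of the DDR3 bandwidth. It uses only the numbers quoted above; the dictionary keys are our own labels.

```python
# Bandwidth figures quoted above, in MB/s.
bandwidth_mb_s = {
    "L1 cache": 42_500,
    "L2 cache": 20_500,
    "Dual-channel DDR3-1333": 8_800,
}

baseline = bandwidth_mb_s["Dual-channel DDR3-1333"]
ratios = {name: value / baseline for name, value in bandwidth_mb_s.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x")
# prints:
# L1 cache: 4.8x
# L2 cache: 2.3x
# Dual-channel DDR3-1333: 1.0x
```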

Some DDR memory modules use buffering technology to achieve higher reliability and performance. These “Buffered” memories, with their more advanced logic and circuitry, are intended for workstation and server class computers.

Fully-Buffered DIMM (FB-DIMM) uses an Advanced Memory Buffer (AMB) to improve data transfer, signal integrity and error detection. It was designed to move data between the AMB and the memory controller in a serial fashion, as opposed to using a parallel stub-bus architecture. The problem with FB-DIMM is how the design's performance scales while staying within its Thermal Design Power: the extra chip on each memory module increases data access latency and heat output quite dramatically.

Common desktop memory modules are known as “Un-buffered” DIMMs; these use a different memory bus technology and are much cheaper in comparison. Readers should note that despite being labelled "DDR2", FB-DIMMs are incompatible with common desktop computers.

Solid State Memory

It is important not to confuse two very distinct kinds of memory: volatile RAM technology and non-volatile Flash-based memory. Flash (or non-volatile) memories are based on two fundamental technologies: NAND and NOR. Why do we have to consider these types of memory when they are not related to DDR?

The future of computer systems relies heavily on existing DDR memories working hand-in-hand with non-volatile Flash-based memories to improve overall system performance. Unlike Random Access Memory (RAM) technology, Flash-based memories are capable of retaining data without a constant source of electricity. While their access speed is slower than current DDR technology, they are generally much faster than the typical mechanical hard drive at random reads because there are no physical movements.

Microsoft Windows Vista, through ReadyBoost, and future operating systems will use both types of memory hand-in-hand to improve overall system performance. A good example is Intel’s “Turbo Memory”, which is based on NAND Flash technology. It was known internally as Intel “Robson” Technology during pre-production, but Turbo Memory now forms an integral part of the latest "Santa Rosa" Centrino platform. It acts like a large cache for frequently used files and applications, and is faster than a hard drive thanks to its minimal random read latency and lack of file fragmentation.

Operating systems that support this type of dual storage technology have an advantage at boot time because critical operating system files can be stored in flash-based memory during start-up. Flash-based memory will work more and more closely with DDR memory systems in future because of the real potential for a performance boost. However, in the near term, no computer will rely heavily on this because flash-based memories have a fairly limited life span compared to hard drives and DDR.

Solid State Hard Disk Drives

Full Solid State hard disk drives are an important part of the future because their power requirement is much lower. Their reliability and survivability in a rugged, mobile environment are also far greater than those of standard mechanical disk drives. However, the cost per GB of solid state storage is still far higher, which is why it is currently used for low-capacity roles like ReadyBoost.

Generally, Solid State memory is slower than current DDR technology and may have a relatively shorter data-writing life span. This is changing rapidly: better technology is hugely increasing life span and density, and the sequential read and write throughput of the fastest devices now equals or exceeds that of hard drives in certain cases. The life span of a device depends on the combination of error correction, bit redundancy, operating logic and self-diagnostic algorithms employed by the manufacturer.

The immediate benefit of using a Solid State HDD is that data survives sudden high-G movements, because there are no moving or spinning mechanical parts. Moreover, Solid State HDDs have much lower power consumption and operating temperatures, making them ideal for notebooks and mobile devices.

Therefore, it is reasonable to expect that many of the failures of traditional mechanical hard disk drives will be eliminated. Large data centres around the world are expected to enjoy a huge reduction in operational costs, with less intense air-conditioning and fewer catastrophic failures. The market for Solid State memory will be larger than that for DRAM in years to come as costs come down and densities increase.
